Agent and Multi-Agent System

We will explore the definition of agents in artificial intelligence (AI) while highlighting the distinctions between objects, experts, and agents. Furthermore, we will discuss the characteristics of multi-agent systems and how they compare to swarm intelligence.

Definition of an Agent

An agent is a computational entity, whether a software program, a hardware component, or a combination of both, designed to achieve specific tasks or goals. It is defined by its capacity to perceive its environment, process the gathered information, and make decisions that affect that environment. LeCun [1] highlighted the following six properties of an intelligent agent:

- Perception: The agent senses information from the environment and estimates the current state.

- Memory: The agent stores past, present, and predicted future states of the environment, along with their associated costs. This stored information is accessible and modifiable by the world model, allowing the agent to make decisions over time or correct inconsistencies and gaps in its understanding of the environment.

- World Model: The agent leverages its current knowledge and past experiences to fill in missing information and predict future states of the environment.

- Actor: The agent selects the optimal sequence of actions from available plans to minimize future costs and achieve its objectives.

- Cost: The agent evaluates its actions and knowledge based on a cost function, consisting of two key components:

  - Intrinsic Cost: This is predefined by the agent’s behavior, remaining constant throughout learning. It reflects the nature of the agent’s behavior over time.

  - Critic: This is trainable during the learning process and estimates long-term outcomes based on the current state and the agent's past experiences.

- Configurator (a.k.a. Context Awareness): The agent adjusts its configurations across other properties in response to changes in the environment, ensuring it stays adaptable and aligned with its goals.
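To make the roles of these properties more concrete, here is a minimal Python sketch of a toy thermostat-style agent. It is an illustration only, not LeCun's architecture: the class name `ThermostatAgent`, the one-line dynamics in `predict`, and the squared-error cost are all assumptions made for this example, and the trainable critic is left out to keep it short.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Hypothetical toy agent that keeps a room near a target temperature."""

    target: float = 21.0                          # goal, adjustable by the configurator
    memory: list = field(default_factory=list)    # past state estimates

    def perceive(self, reading: float) -> float:
        # Perception: turn a sensor reading into a state estimate and store it.
        self.memory.append(reading)
        return reading

    def predict(self, state: float, action: float) -> float:
        # World model (assumed dynamics): the action shifts the temperature directly.
        return state + action

    def cost(self, state: float) -> float:
        # Intrinsic cost: fixed, squared distance from the target temperature.
        return (state - self.target) ** 2

    def act(self, state: float, actions=(-1.0, 0.0, 1.0)) -> float:
        # Actor: choose the action whose predicted next state has the lowest cost.
        return min(actions, key=lambda a: self.cost(self.predict(state, a)))

    def configure(self, new_target: float) -> None:
        # Configurator: adapt the goal when the context changes.
        self.target = new_target


agent = ThermostatAgent()
state = agent.perceive(18.0)   # the room is colder than the target
action = agent.act(state)      # the agent chooses to heat (+1.0)
```

Each method maps to one property from the list above, which is the only point of the sketch; a real agent would learn its world model and critic rather than hard-code them.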

While these properties are needed to build an intelligent agent, not every agent requires all of them. For example:

- A traffic light operates on a fixed timing cycle without needing to perceive real-time traffic conditions or adapt to changing circumstances.

- A simple robotic arm designed for a specific task does not build a world model. Instead, it depends solely on predefined movements.

- A simple light timer turns lights on or off after a specific duration. It does not consider the cost of electricity or optimal usage times.

Real-world examples of intelligent agents include:

- Robots in factories or warehouses

- Autonomous driving vehicles

- Chatbots with Large Language Models (LLMs)

- Recommendation Systems (e.g., Music recommendation on Spotify, video recommendation on YouTube, etc.)

- Virtual Assistants (e.g., Siri, Google Assistant, Alexa, etc.)

- Unmanned Aerial Vehicles (UAVs) (e.g., search and rescue drones, military drones, etc.)

- AI agents in video games

Object, Expert and Agent

In computer science, the concepts of objects in object-oriented programming and experts in expert systems are widely recognized. However, differentiating these concepts from agents in multi-agent systems can often be challenging. Each of these paradigms—object-oriented programming, expert systems, and multi-agent systems—serves distinct purposes, making it essential to clearly characterize them. As outlined by Dorri et al. [2], four primary attributes are utilized to differentiate between objects, experts, and agents (refer to Table 1).

| Attribute | Object | Expert | Agent |
|---|---|---|---|
| Purpose | To model entities and behaviors | To emulate human expert decision-making | To coordinate and communicate for autonomous problem-solving |
| Communication and Coordination | Function calls | Rule-based reasoning | Interaction protocols |
| Learning Ability | Static code | Limited learning modules | Learn and adapt autonomously |
| Environment | Static | Static | Static and dynamic |

Some examples of each concept include:

- Object

  - "Book" objects may encapsulate properties like title, author, and ISBN.

  - "Player" objects store attributes like health, position, or score.

- Expert

  - A machine learning model classifies transactions as fraud based on past data patterns.

  - A machine learning model suggests products or movies based on previous user interactions and preferences.

- Agent

  - A robotic vacuum cleaner acts as an agent, moving and cleaning autonomously while avoiding obstacles.

  - Drones explore a disaster area, detect survivors using sensors, and adapt their behavior based on terrain and environmental conditions.
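The contrast between the three concepts can be sketched in a few lines of Python. Everything here is hypothetical and chosen for illustration: the `Book` fields follow the example above, `fraud_expert` stands in for an expert with hand-written rules and made-up thresholds (the fraud example above uses a learned model instead), and `VacuumAgent` works on a one-dimensional floor where `True` marks a dirty cell.

```python
from dataclasses import dataclass


@dataclass
class Book:
    # Object: passive data that only does something when other code calls it.
    title: str
    author: str
    isbn: str


def fraud_expert(amount: float, country_mismatch: bool) -> bool:
    # Expert: fixed decision rules that emulate a human specialist.
    # The thresholds are invented for this sketch.
    return amount > 10_000 or (country_mismatch and amount > 1_000)


class VacuumAgent:
    # Agent: perceives its surroundings, decides, and acts on its own each step.
    def __init__(self, position: int = 0):
        self.position = position

    def step(self, floor: list) -> str:
        if floor[self.position]:               # perceive dirt under the agent
            floor[self.position] = False       # act on the environment
            return "clean"
        if self.position < len(floor) - 1:     # more floor ahead: keep exploring
            self.position += 1
            return "move"
        return "idle"                          # nothing left to do


floor = [True, False, True]
vacuum = VacuumAgent()
actions = [vacuum.step(floor) for _ in range(5)]
```

The object never initiates anything, the expert answers a question when asked, and the agent changes its environment as a result of its own perception-decision loop.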

Multi-Agent Systems

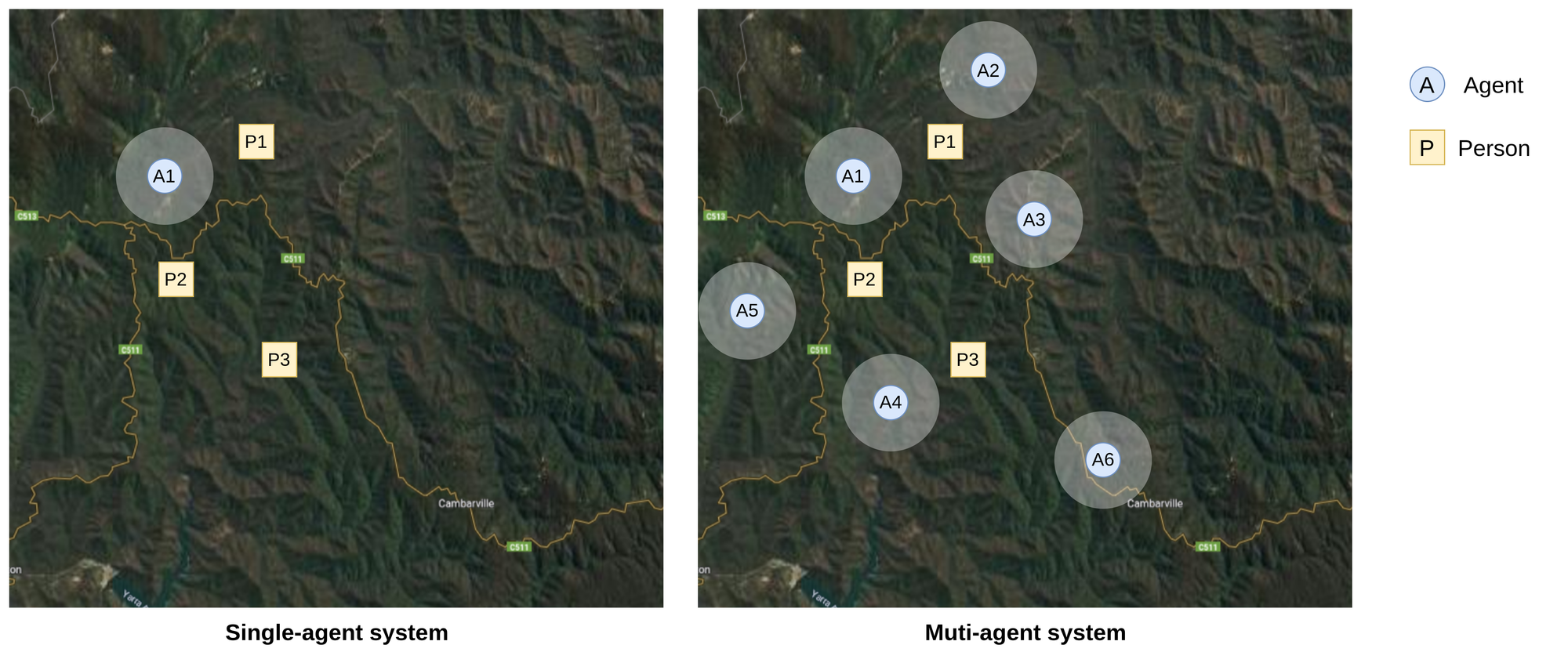

A Multi-Agent System (MAS) consists of multiple agents that may cooperate, compete, or act independently within a shared environment. In a MAS, agents have both individual and collective goals, which complicates the management of communication and coordination among them. Two primary aspects of MAS design address this challenge: organizational structure and consensus.

- An organizational structure consists of roles, relationships, and authority. Its purpose is to simplify the models of agents, regulate their behavior, and minimize uncertainty. Details of multi-agent organizational structures can be found in [3].

- Consensus refers to a mutual agreement on a shared value or state among agents. It aims to enable stable information exchange between agents while they work towards achieving their goals. Details of multi-agent consensus can be found in [4, 5, 6].
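As an illustration of consensus, the sketch below implements a basic averaging scheme in which each agent repeatedly nudges its value toward those of its neighbors. This is one simple textbook-style protocol and not necessarily the one used in the surveys cited above; the function names, the `step_size` of 0.3, and the four-agent line topology are assumptions for the example. For an undirected, connected network and a step size below one over the largest number of neighbors, the values converge to the average of the initial values.

```python
def consensus_step(values, neighbors, step_size=0.3):
    # Each agent moves toward its neighbors' values using only local information.
    return [
        x + step_size * sum(values[j] - x for j in neighbors[i])
        for i, x in enumerate(values)
    ]


def run_consensus(values, neighbors, rounds=100, step_size=0.3):
    # Repeat the local update for a fixed number of communication rounds.
    for _ in range(rounds):
        values = consensus_step(values, neighbors, step_size)
    return values


# Four agents connected in a line: 0 - 1 - 2 - 3 (hypothetical topology).
line_neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
initial = [10.0, 20.0, 30.0, 40.0]
final = run_consensus(initial, line_neighbors)   # every value approaches 25.0
```

No agent ever sees the whole network, yet all of them end up agreeing on the same value, which is the shared state the definition above refers to.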

MAS is utilized to overcome the limitations associated with single-agent systems, which include inefficiency, high costs, and unreliability. For instance:

- In search and rescue operations, a single agent may take a long time to explore the entire area and locate individuals, especially as people move within the environment. To facilitate effective exploration, multiple agents need to navigate the area simultaneously (refer to Figure 3).

- In the context of autonomous vehicles (i.e., individual agents), effective communication and coordination are essential to prevent traffic collisions (refer to Figure 4).

- Managing the vast amount of information required to control traffic flow in smart cities can be overwhelming for a single-agent system. Distributing tasks across a multi-agent system can lead to greater efficiency, scalability, and reliability.

Other applications of MAS include:

- Autonomous navigation

- Supply chain management

- Internet of Things (IoT)

- Disaster relief management

- Energy efficiency and sustainability

- Digital assistance

- Education

- Economic modelling

- Simulating human society for studies in social science

Multi-Agent Systems and Swarm Intelligence

Swarm Intelligence (SI) can be viewed as a subset of multi-agent systems (MAS). It focuses on simple, decentralized agents that contribute to emergent collective behaviors [7]. The design principles of MAS differ from those of SI because the two address distinct problem sets. Understanding the differences between MAS and SI is therefore essential for selecting the most suitable approach.

SI represents a collective behavior found in decentralized, self-organizing systems, inspired by natural phenomena observed in animal groups, such as flocks of birds, schools of fish, and ant colonies [7]. In swarm systems, individual agents follow simple rules and engage in local interactions with each other. This leads to complex, coordinated group behaviors without centralized control or leadership. Such emergent behaviors allow swarms to adapt to changing environments, make collective decisions, and effectively tackle challenges. Table 2 illustrates the distinctions between MAS and SI across five aspects.

| Aspect | SI | MAS |
|---|---|---|
| Control Structure | Decentralized | Decentralized, centralized, or hybrid |
| Interaction | Primarily local interactions; minimal communication | Local or global communication based on protocols |
| Agent Reasoning | Simple rules for each agent | Situation-oriented or goal-oriented reasoning for each agent |
| Goal Orientation | Collective goals emerge from individual actions | Individual goals or shared goals |
| Decision-Making | Decisions made through local interactions or collective agreements | Independent decisions made by an agent, and collective decisions made through agent coordination |
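To show how simple per-agent rules can produce useful collective behavior, here is a minimal sketch of particle swarm optimization (PSO), a well-known SI algorithm. The parameter values, the `sphere` test function, and the function names are assumptions for this example. Note that this basic variant lets every particle read a swarm-wide best position, so its interaction is less strictly local than the SI column above suggests.

```python
import random


def sphere(p):
    # Toy cost function with its minimum of 0 at the origin.
    return sum(x * x for x in p)


def pso(cost, dim=2, n_particles=20, iters=100, seed=0,
        inertia=0.7, c_personal=1.5, c_social=1.5):
    rng = random.Random(seed)
    pos = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]             # each particle's own best position
    best_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_cost[i])
    swarm_best, swarm_cost = best_pos[g][:], best_cost[g]
    history = [swarm_cost]                     # best cost after each iteration
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Simple rule: keep some momentum, then drift toward the
                # particle's own best and the swarm's best position.
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_social * r2 * (swarm_best[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            c = cost(pos[i])
            if c < best_cost[i]:
                best_pos[i], best_cost[i] = pos[i][:], c
                if c < swarm_cost:
                    swarm_best, swarm_cost = pos[i][:], c
        history.append(swarm_cost)
    return swarm_best, swarm_cost, history


best, best_value, history = pso(sphere)
```

No particle plans or reasons about the problem; each applies the same three-term update, and the search for the minimum emerges from the swarm as a whole. By construction, the recorded best cost in `history` never increases from one iteration to the next.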

Some applications of SI include:

- Networking and communication optimization [8]

- Optimization in drug discovery

- Economic modelling

- Simulation of social behavior

- Optimization in energy distribution and consumption

- Wildlife tracking and conservation

References

1. LeCun, Y., 2022. A path towards autonomous machine intelligence, version 0.9.2, 2022-06-27. Open Review, 62(1), pp.1-62.

2. Dorri, A., Kanhere, S.S. and Jurdak, R., 2018. Multi-agent systems: A survey. IEEE Access, 6, pp.28573-28593.

3. Horling, B. and Lesser, V., 2004. A survey of multi-agent organizational paradigms. The Knowledge Engineering Review, 19(4), pp.281-316.

4. Qin, J., Ma, Q., Shi, Y. and Wang, L., 2016. Recent advances in consensus of multi-agent systems: A brief survey. IEEE Transactions on Industrial Electronics, 64(6), pp.4972-4983.

5. Li, Y. and Tan, C., 2019. A survey of the consensus for multi-agent systems. Systems Science & Control Engineering, 7(1), pp.468-482.

6. Amirkhani, A. and Barshooi, A.H., 2022. Consensus in multi-agent systems: a review. Artificial Intelligence Review, 55(5), pp.3897-3935.

7. Bonabeau, E., Dorigo, M. and Theraulaz, G., 1999. Swarm Intelligence: From Natural to Artificial Systems. Oxford University Press.

8. Mavrovouniotis, M., Li, C. and Yang, S., 2017. A survey of swarm intelligence for dynamic optimization: Algorithms and applications. Swarm and Evolutionary Computation, 33, pp.1-17.