Applications of Multi-Agent Systems in Autonomous Navigation

This post gives an overview of autonomous navigation and its common applications, and illustrates how multi-agent systems can be applied to it.

Introduction

Advances in Artificial Intelligence (AI) and robotics have significantly improved autonomous navigation systems, enabling them to operate effectively in dynamic and unpredictable environments. Designing such systems involves managing interconnected internal processes (see also Section "Autonomous Navigation"). In many existing systems, a single agent handles all of these processes, which leads to latency and task-overload problems [1, 2]. Furthermore, autonomous navigation systems must communicate and coordinate with external entities to accomplish tasks effectively. For example, autonomous vehicles need to observe other vehicles to avoid collisions, and drones in search-and-rescue missions must retrieve positional data from satellites and exchange information with other drones to complete their tasks. However, devising effective communication and coordination strategies remains a complex research challenge. To address these issues, the research community has been investigating applications of multi-agent systems (MAS) that improve both internal processes and external coordination. This post provides an overview of autonomous navigation systems and of applications of MAS in autonomous navigation.

Autonomous Navigation

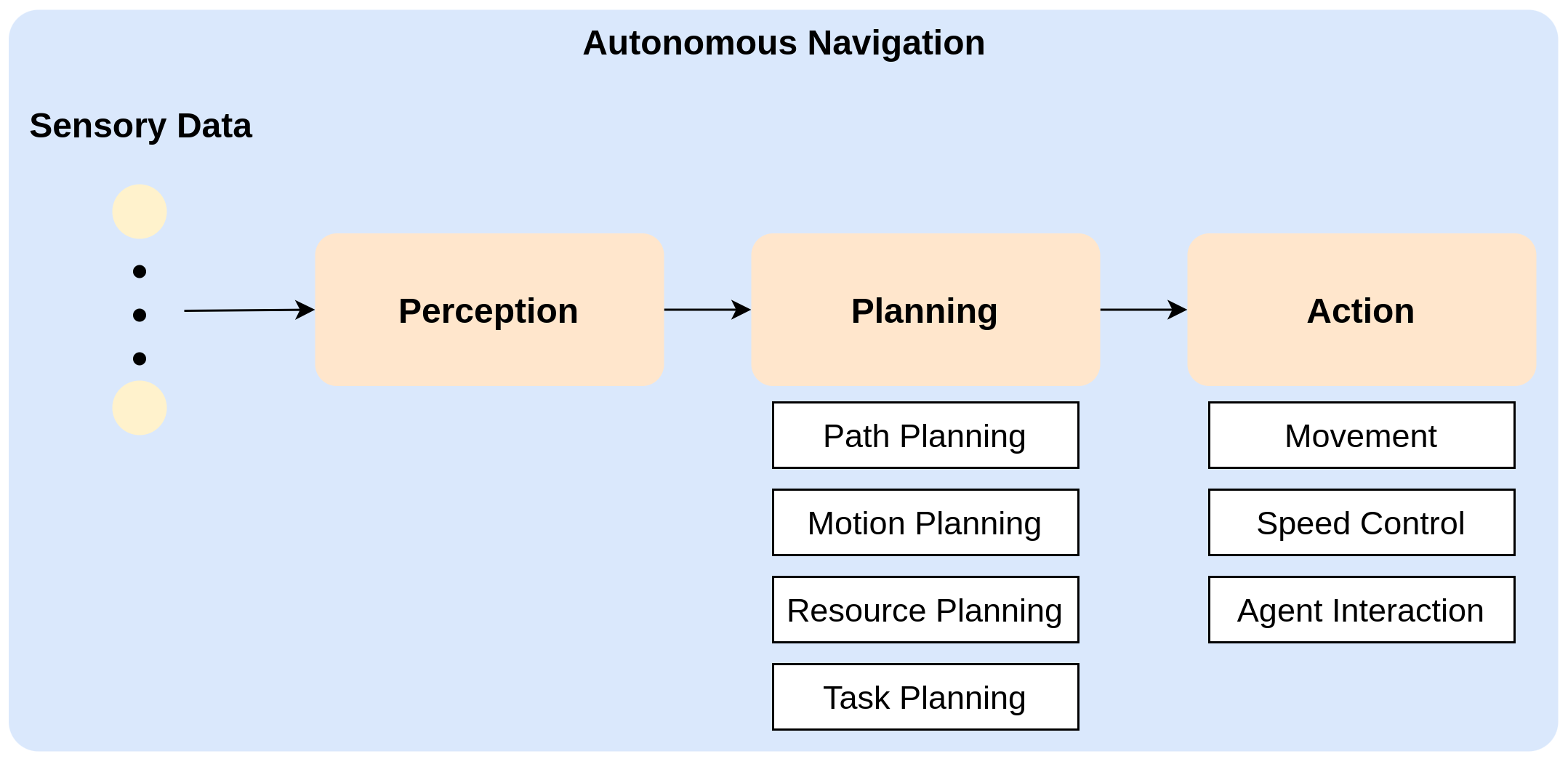

Autonomous navigation refers to the capability of a system (e.g., a robot, vehicle, or drone) to move through an environment without human intervention. This involves perceiving the environment, interpreting what is perceived, planning a path, and executing movements to achieve tasks effectively (e.g., reaching a destination safely and efficiently, or finding more target objects) [3, 4]. It is worth noting that these interconnected processes are similar to the six intelligent properties highlighted in Agent and Multi-Agent System. Key tasks of an autonomous navigation system include:

- Perception: Gathering and fusing data from multiple sources.

- Object detection: Detecting objects on the path.

- Object tracking: Tracking targeted objects on the path.

- Obstacle avoidance: Steering clear of obstacles that block the planned path.

- Collision avoidance: Preventing potential collisions that would compromise task completion.

- Path planning: Planning a set of optimal paths to accomplish tasks safely and efficiently.

- Steering: Determining the appropriate direction or adjustments while accomplishing tasks (e.g., turning the wheel in an autonomous car, or reducing speed to avoid a collision between drones).

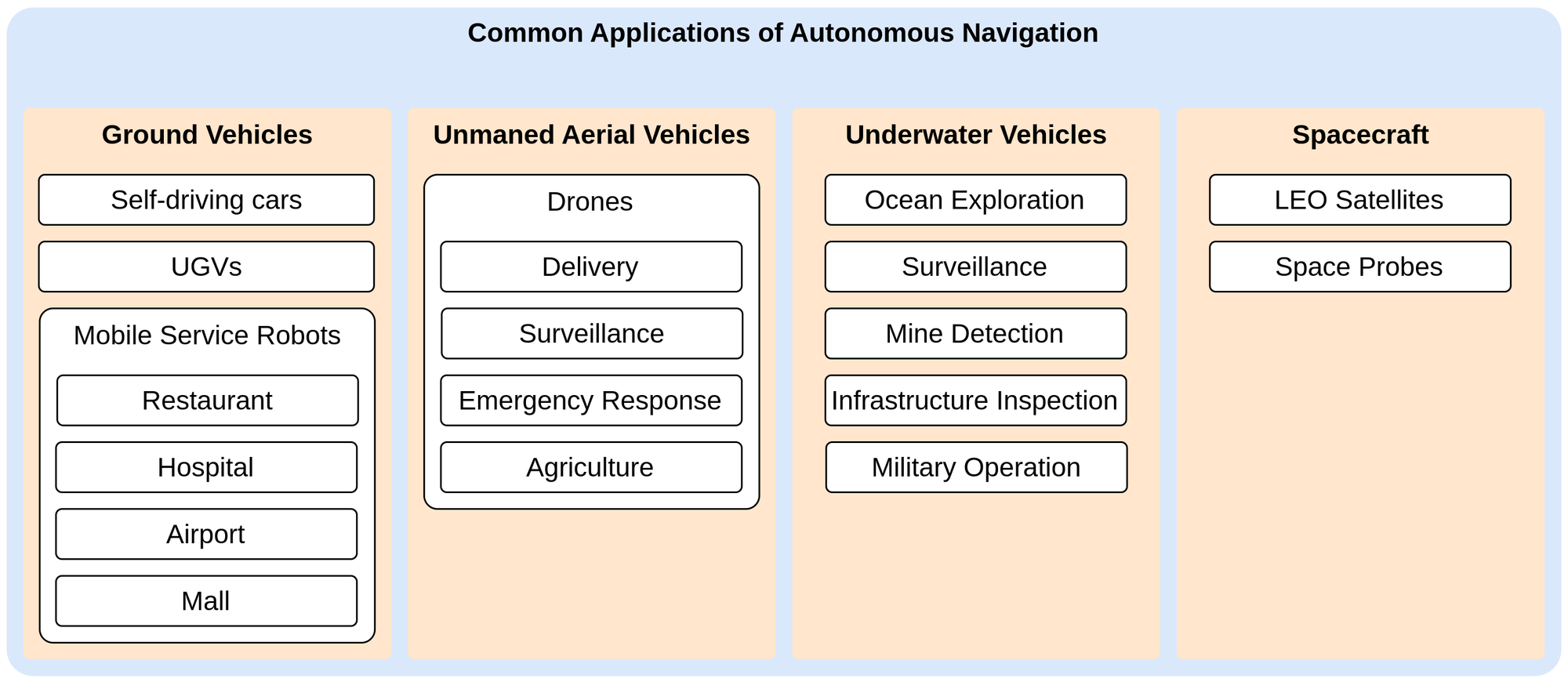

Autonomous navigation systems are not limited to indoor environments (such as robot waiters in restaurants, cleaning robots in homes, and multi-functional robots in warehouses) [5, 6, 7] but are also deployed in outdoor environments (including autonomous vehicles, drone-based delivery systems, military submarines, and more) [5, 7, 8]. Common applications of autonomous navigation systems can be categorized into four groups [4, 8, 9]:

- Ground vehicles: Self-driving cars, unmanned ground vehicles (UGVs) for military purposes, and service robots for restaurants, hospitals, airports, or malls.

- Unmanned aerial vehicles (UAVs): Autonomous drones for a variety of purposes (e.g., delivery, surveillance, emergency response, and agriculture).

- Underwater vehicles: Autonomous vehicles for ocean exploration, surveillance and mine detection, and underwater infrastructure inspection.

- Spacecraft: Low Earth orbit satellites and space probes.

Applications of Multi-Agent Systems in Autonomous Navigation

In real-world applications, autonomous navigation systems can utilize multi-agent frameworks to improve task efficiency and effectiveness. An autonomous vehicle can be considered an agent, and these frameworks address challenges related to both the internal and external aspects of an agent. External aspects involve interactions among multiple agents (see also Agent and Multi-Agent System), while internal aspects focus on the interactions between the internal components of an agent. For example, the internal components of a self-driving car might include cameras, a steering mechanism, a smart fuel engine, and other subsystems working together.

Multi-Agent Frameworks for External Aspects

A multi-agent framework enables agents to collaborate, share information, and make decisions that collectively enhance the overall system's performance. A few examples of multi-agent frameworks for external aspects include:

- Self-driving cars share information while navigating streets, enabling them to find optimal routes to their destinations and avoid traffic collisions [10, 11, 12, 13].

- Autonomous drones exchange data during search-and-rescue operations, providing insights into unknown environmental states that support better decision-making [14, 15, 16]. In addition, this information helps drones optimize their objectives in resource-constrained scenarios [17].

- Warehouse robots communicate and coordinate to distribute tasks efficiently and prevent collisions during operation [18].
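As a rough illustration of the warehouse example, the sketch below distributes pick tasks among robots so that no two robots receive the same task. It assumes each robot shares its grid position and uses a simple greedy rule (repeatedly assign the closest robot-task pair); the names `allocate_tasks`, `robots`, and `tasks` are hypothetical, and the cited frameworks may use quite different allocation strategies.

```python
Position = tuple[int, int]


def manhattan(a: Position, b: Position) -> int:
    """Grid distance between two positions."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def allocate_tasks(
    robots: dict[str, Position], tasks: dict[str, Position]
) -> dict[str, str]:
    """Greedy allocation: repeatedly assign the closest (robot, task) pair.

    Each robot gets at most one task and each task at most one robot,
    so the result contains no task conflicts. Ties are broken by name.
    """
    free_robots = dict(robots)
    open_tasks = dict(tasks)
    assignment: dict[str, str] = {}
    while free_robots and open_tasks:
        robot, task = min(
            ((r, t) for r in free_robots for t in open_tasks),
            key=lambda pair: (
                manhattan(free_robots[pair[0]], open_tasks[pair[1]]),
                pair,
            ),
        )
        assignment[robot] = task
        del free_robots[robot]
        del open_tasks[task]
    return assignment


# Positions shared by the robots, and task locations (illustrative values).
robots = {"r1": (0, 0), "r2": (5, 5), "r3": (9, 0)}
tasks = {"pick_A": (1, 1), "pick_B": (8, 1), "pick_C": (5, 7)}

assignment = allocate_tasks(robots, tasks)
print(assignment)  # {'r1': 'pick_A', 'r2': 'pick_C', 'r3': 'pick_B'}
```

The greedy rule is easy to reason about but not guaranteed to minimize total travel distance; it is used here only to show how shared position data lets agents split work without conflicts.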

Evaluation metrics for these multi-agent frameworks tend to focus on optimization, such as:

- Optimizing resource usage for task completion.

- Optimizing communication bandwidth.

- Optimizing domain-specific objectives (e.g., shortest time taken to reach the destination, longest duration of navigation without a collision, distributing tasks without a task conflict, etc.).

The development of multi-agent frameworks in this category encounters several challenges:

- Scalability: Although multi-agent frameworks perform well in proof-of-concept tests, they often face scalability challenges in real-world applications, where the number of agents increases significantly.

- Communication and Coordination: Handcrafting all possible interactions among agents in real-world scenarios is impractical and time-intensive. Therefore, agents need the ability to dynamically synthesize interactions based on the specific context. Developing effective strategies for such dynamic interaction synthesis remains an open challenge.

Multi-Agent Frameworks for Internal Aspects

A multi-agent framework treats internal aspects as individual agents working collaboratively to ensure seamless operation and optimal performance of the overall system. A few examples of multi-agent frameworks for internal aspects include:

- The steering engine of a self-driving car interacts with the vision engine to navigate and avoid traffic collisions [12, 19, 20].

- The steering engine of an autonomous drone interacts with the vision engine to adjust the drone's direction for obstacle and collision avoidance [21, 22, 23].

- The path-planning engine of an autonomous drone coordinates with the battery management engine to determine optimal paths in resource-constrained situations [24, 25].

- The vision engine of an autonomous drone utilizes multi-agent frameworks for object tracking [26].

Evaluation metrics for these multi-agent frameworks tend to focus on runtime performance and domain-specific task optimization. In comparative analyses, the baseline for evaluating multi-agent frameworks in this category is a single-agent system in which one agent handles all tasks.

The development of multi-agent frameworks in this category faces several challenges:

- Synchronization: Developing effective strategies to ensure consistent decision-making among all agents remains an open challenge.

- Adaptability: Operating multi-agent frameworks that can reconfigure dynamically without significant downtime is a challenging problem.

References

- [1] Crawford, N., Duffy, E.B., Evazzade, I., Foehr, T., Robbins, G., Saha, D.K., Varma, J. and Ziolkowski, M., 2024. BMW Agents--A Framework For Task Automation Through Multi-Agent Collaboration. arXiv preprint arXiv:2406.20041.

- [2] Ferguson, D., 2006. Single agent and multi-agent path planning in unknown and dynamic environments. Carnegie Mellon University.

- [3] Salhi, I., Poreba, M., Piriou, E., Gouet-Brunet, V. and Ojail, M., 2019. Multimodal Localization for Embedded Systems: A Survey. In Multimodal Scene Understanding (pp. 199-278). Academic Press.

- [4] Nahavandi, S., Alizadehsani, R., Nahavandi, D., Mohamed, S., Mohajer, N., Rokonuzzaman, M. and Hossain, I., 2022. A comprehensive review on autonomous navigation. arXiv preprint arXiv:2212.12808.

- [5] Roy, P. and Chowdhury, C., 2021. A survey of machine learning techniques for indoor localization and navigation systems. Journal of Intelligent & Robotic Systems, 101(3), p.63.

- [6] El-Sheimy, N. and Li, Y., 2021. Indoor navigation: State of the art and future trends. Satellite Navigation, 2(1), p.7.

- [7] Tang, Y., Zhao, C., Wang, J., Zhang, C., Sun, Q., Zheng, W.X., Du, W., Qian, F. and Kurths, J., 2022. Perception and navigation in autonomous systems in the era of learning: A survey. IEEE Transactions on Neural Networks and Learning Systems, 34(12), pp.9604-9624.

- [8] Guastella, D.C. and Muscato, G., 2020. Learning-based methods of perception and navigation for ground vehicles in unstructured environments: A review. Sensors, 21(1), p.73.

- [9] Turan, E., Speretta, S. and Gill, E., 2022. Autonomous navigation for deep space small satellites: Scientific and technological advances. Acta Astronautica, 193, pp.56-74.

- [10] Doniec, A., Mandiau, R., Piechowiak, S. and Espié, S., 2008. A behavioral multi-agent model for road traffic simulation. Engineering Applications of Artificial Intelligence, 21(8), pp.1443-1454.

- [11] Dinneweth, J., Boubezoul, A., Mandiau, R. and Espié, S., 2022. Multi-agent reinforcement learning for autonomous vehicles: A survey. Autonomous Intelligent Systems, 2(1), p.27.

- [12] Zhang, R., Hou, J., Walter, F., Gu, S., Guan, J., Röhrbein, F., Du, Y., Cai, P., Chen, G. and Knoll, A., 2024. Multi-agent reinforcement learning for autonomous driving: A survey. arXiv preprint arXiv:2408.09675.

- [13] Muzahid, A.J.M., Kamarulzaman, S.F., Rahman, M.A., Murad, S.A., Kamal, M.A.S. and Alenezi, A.H., 2023. Multiple vehicle cooperation and collision avoidance in automated vehicles: Survey and an AI-enabled conceptual framework. Scientific Reports, 13(1), p.603.

- [14] Queralta, J.P., Taipalmaa, J., Pullinen, B.C., Sarker, V.K., Gia, T.N., Tenhunen, H., Gabbouj, M., Raitoharju, J. and Westerlund, T., 2020. Collaborative multi-robot search and rescue: Planning, coordination, perception, and active vision. IEEE Access, 8, pp.191617-191643.

- [15] Drew, D.S., 2021. Multi-agent systems for search and rescue applications. Current Robotics Reports, 2, pp.189-200.

- [16] Lyu, M., Zhao, Y., Huang, C. and Huang, H., 2023. Unmanned aerial vehicles for search and rescue: A survey. Remote Sensing, 15(13), p.3266.

- [17] Javed, S., Hassan, A., Ahmad, R., Ahmed, W., Ahmed, R., Saadat, A. and Guizani, M., 2024. State-of-the-art and future research challenges in UAV swarms. IEEE Internet of Things Journal.

- [18] da Costa Barros, Í.R. and Nascimento, T.P., 2021. Robotic mobile fulfillment systems: A survey on recent developments and research opportunities. Robotics and Autonomous Systems, 137, p.103729.

- [19] Wang, J., Sun, H. and Zhu, C., 2023. Vision-based autonomous driving: A hierarchical reinforcement learning approach. IEEE Transactions on Vehicular Technology, 72(9), pp.11213-11226.

- [20] Ganesan, M., Kandhasamy, S., Chokkalingam, B. and Mihet-Popa, L., 2024. A Comprehensive Review on Deep Learning-Based Motion Planning and End-To-End Learning for Self-Driving Vehicle. IEEE Access.

- [21] Llorca, D.F., Milanés, V., Alonso, I.P., Gavilán, M., Daza, I.G., Pérez, J. and Sotelo, M.Á., 2011. Autonomous pedestrian collision avoidance using a fuzzy steering controller. IEEE Transactions on Intelligent Transportation Systems, 12(2), pp.390-401.

- [22] Song, S., Zhang, Y., Qin, X., Saunders, K. and Liu, J., 2021, August. Vision-guided collision avoidance through deep reinforcement learning. In NAECON 2021-IEEE National Aerospace and Electronics Conference (pp. 191-194). IEEE.

- [23] Sanyal, S., Manna, R.K. and Roy, K., 2024. EV-Planner: Energy-Efficient Robot Navigation via Event-Based Physics-Guided Neuromorphic Planner. IEEE Robotics and Automation Letters.

- [24] Gugan, G. and Haque, A., 2023. Path planning for autonomous drones: Challenges and future directions. Drones, 7(3), p.169.

- [25] Alyassi, R., Khonji, M., Karapetyan, A., Chau, S.C.K., Elbassioni, K. and Tseng, C.M., 2022. Autonomous recharging and flight mission planning for battery-operated autonomous drones. IEEE Transactions on Automation Science and Engineering, 20(2), pp.1034-1046.

- [26] Zhu, P., Zheng, J., Du, D., Wen, L., Sun, Y. and Hu, Q., 2020. Multi-drone-based single object tracking with agent sharing network. IEEE Transactions on Circuits and Systems for Video Technology, 31(10), pp.4058-4070.