Adaptive Autonomous Agents, Motivation and Levels of Autonomy

AI research and industry are increasingly focused on autonomous agents, yet definitions of autonomy vary across domains. To understand its evolution, we revisit how autonomy and autonomous agents were defined in research from the 1930s to the 2000s.

Introduction

Recently, the AI research community and various industries have focused increasingly on developing autonomous agents. However, the definitions of autonomy and autonomous agents vary across domains, which makes it worth revisiting how these concepts were understood before the current rise of AI. In this post, we explore how autonomy and autonomous agents were defined in research papers from the 1930s to the 2000s.

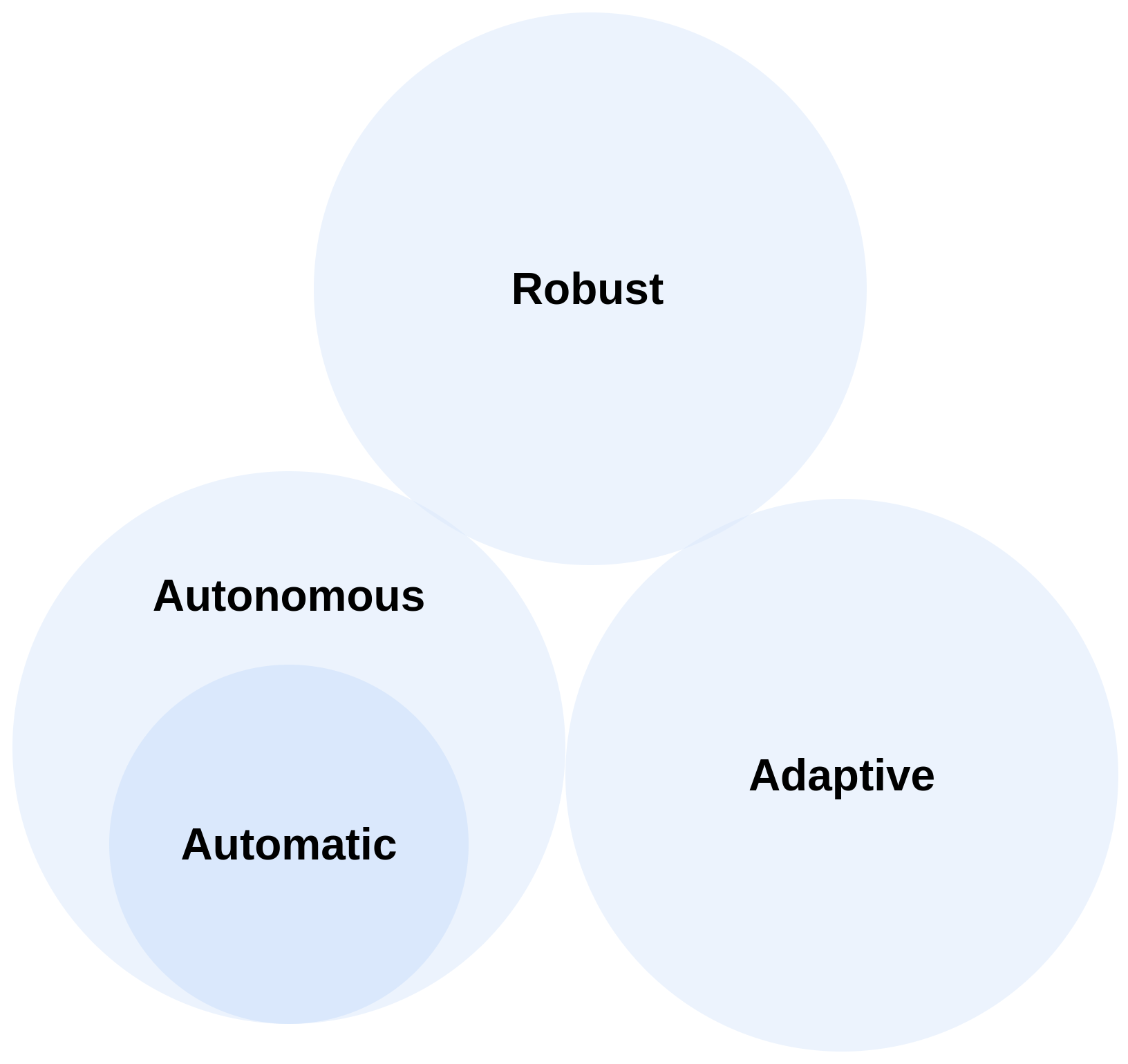

Automatic, Autonomous, Adaptive and Robust

Software is automatic when its behavior is fully defined by a given configuration. The configuration can be expressed as a set of requirements or rules that dictate the commands the software may issue. As a consequence, automatic software is constrained by its predefined configuration and cannot adapt to unknown or ambiguous scenarios. This concept of being automatic was highlighted by Alan Turing in 1936 [1]. For example:

- Rule-based Chatbots: Chatbots that follow predefined rules or responses only respond according to their programmed scripts and cannot handle ambiguous or novel queries.

- Navigation Robots: Robots that follow predefined paths cannot handle unexpected events or obstacles along the way.
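As a minimal sketch of the rule-based chatbot example, the snippet below hard-codes a rule table and a fallback. The names `RULES`, `FALLBACK`, and `automatic_reply` are hypothetical and chosen only for illustration; the point is that every possible behavior is fixed by the configuration.

```python
# Hypothetical rule table: the whole behavior of the bot is defined here.
RULES = {
    "hello": "Hi! How can I help you?",
    "opening hours": "We are open from 9am to 5pm.",
}
FALLBACK = "Sorry, I do not understand."


def automatic_reply(message: str) -> str:
    """Return the scripted response of the first rule whose keyword matches."""
    text = message.lower()
    for keyword, response in RULES.items():
        if keyword in text:
            return response
    # No rule matches: an automatic system cannot adapt to a novel query.
    return FALLBACK


print(automatic_reply("Hello there"))
print(automatic_reply("Can you book a flight?"))
```

Any query outside the rule table falls through to the same fallback, which is the limitation that the remaining definitions in this section address.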

Autonomous software possesses all the traits of automatic software but extends them in several ways. It can handle unexpected events and make decisions independently to achieve time-dependent goals. For instance, autonomous software determines how to interpret sensory data and translate it into actions that fulfill its goals. Moreover, its internal structures (i.e., modules) can be generated dynamically, and its operations do not need to be initiated by a user-defined goal. This concept of being autonomous was highlighted by Pattie Maes in 1993 [2].

Software is adaptive when it continuously improves its ability to achieve time-dependent goals by leveraging historical information stored in its memory [2]. As the volume of historical information grows, its performance in achieving goals improves. In addition, adaptive software is situated within an environment where it can sense data and execute actions (i.e., commands) in response to changing situations. Because the environment changes rapidly, it must act quickly. To enable this, the architecture of adaptive software incorporates the following traits:

- Modules are distributed and often operate asynchronously.

- Modules have fewer layers of information processing.

- Modules require minimal computational resources.

- Modules can handle unforeseen situations, relying more on environmental feedback than on potentially faulty or outdated internal knowledge models.
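The idea of improving from stored history can be sketched as follows. This is an illustrative assumption rather than the architecture described in [2]: the class `AdaptiveSelector`, its methods, and the averaging rule are hypothetical. The selector keeps a small memory of environmental feedback per situation and action, and prefers the action with the best average outcome so far.

```python
from collections import defaultdict


class AdaptiveSelector:
    """Pick actions using feedback stored in memory (hypothetical sketch)."""

    def __init__(self, actions):
        self.actions = list(actions)
        self.totals = defaultdict(float)  # summed feedback per (situation, action)
        self.counts = defaultdict(int)    # number of trials per (situation, action)

    def choose(self, situation):
        def score(action):
            n = self.counts[(situation, action)]
            # Untried actions score +inf so that each one is tried at least once.
            return float("inf") if n == 0 else self.totals[(situation, action)] / n

        return max(self.actions, key=score)

    def record(self, situation, action, feedback):
        """Store feedback from the environment for later decisions."""
        key = (situation, action)
        self.totals[key] += feedback
        self.counts[key] += 1


selector = AdaptiveSelector(["left", "right"])
selector.record("obstacle", "left", -1.0)   # turning left went badly
selector.record("obstacle", "right", 1.0)   # turning right went well
print(selector.choose("obstacle"))
```

The lookup is a single pass over a small table, which is in the spirit of the traits above: little processing depth, few resources, and decisions driven by feedback rather than by a detailed internal model.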

Software is robust if it remains functional in unexpected situations, even when some of its modules fail [2]. In such cases, its performance may degrade, but it does not break down completely. To achieve robustness, the software architecture includes the following key traits:

- No single module is more critical than the others.

- Modules do not attempt to fully understand the current situation, as this can be time-consuming and challenging.

- Modules incorporate redundant methods to enhance reliability.

- Modules continuously update their inferences over time.
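Graceful degradation through redundancy can be sketched like this. The function `robust_estimate` and the toy modules are hypothetical; combining the surviving results with a median is one simple choice made for this example, not something prescribed by [2].

```python
from statistics import median


def robust_estimate(modules, reading):
    """Combine redundant modules and skip the ones that fail."""
    results = []
    for module in modules:
        try:
            results.append(module(reading))
        except Exception:
            # A failed module degrades the result but does not stop the system.
            continue
    if not results:
        return None  # every module failed, so there is nothing to report
    return median(results)


def module_a(x):
    return x


def module_b(x):
    return x + 2


def broken_module(x):
    raise RuntimeError("sensor driver crashed")


print(robust_estimate([module_a, broken_module, module_b], 5))
```

No module is treated as more critical than the others: removing any one of them changes the estimate but leaves the system running.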

In this section, we use the term "software" for an entity that can be described by four adjectives: automatic, autonomous, adaptive, and robust. If we treat the software as an agent, these concepts remain unchanged.

Answer: It depends on the environment where we operate the agent. In environments where the cost of incorrect actions is high, an agent should prioritize anticipation. Conversely, if the cost of mistakes is negligible, frequent incorrect actions have little impact. In rapidly changing environments, an agent must act quickly. However, an agent with noisy sensors should be passive in its action selection to prevent a single faulty reading from drastically altering its behavior. In addition, an agent with many sensors can rely on environmental cues to guide its actions, whereas an agent with fewer sensors must depend more on its internal state or memory to determine its next move.
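The point about noisy sensors can be illustrated with a small sketch. The technique used here, an exponential moving average, is our own choice for the example and is not taken from the cited work; `smooth`, `should_stop`, `alpha`, and `threshold` are hypothetical names and parameters.

```python
def smooth(readings, alpha=0.2):
    """Exponential moving average: each new reading moves the estimate only a little."""
    smoothed = []
    estimate = None
    for reading in readings:
        if estimate is None:
            estimate = reading
        else:
            estimate = alpha * reading + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed


def should_stop(distance, threshold=1.0):
    """Hypothetical action rule: stop when an obstacle appears closer than the threshold."""
    return distance < threshold


readings = [5.0, 5.0, 0.0, 5.0]  # the third reading is a sensor glitch
smoothed = smooth(readings)
print(should_stop(readings[2]))  # acting on the raw reading triggers a stop
print(should_stop(smoothed[2]))  # acting on the smoothed estimate does not
```

A smaller `alpha` makes the agent more resistant to single faulty readings but slower to react to real changes, which is the same trade-off between acting quickly and acting cautiously described above.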

Agent, Goals and Autonomous Agent

We previously published a post [3] defining the concept of an agent. While that definition is valid from one perspective, it lacks detail from other viewpoints, so refining it is worthwhile. We also want to study the relationship between an agent and its goals. To do both, we refer to [4], published in 1995. Details are provided below.

An agent is an object designed to serve a specific purpose or a set of purposes. A purpose can be defined as a goal, which may be set by a human, other agents, or the agent itself. For example, if a robot has a goal, such as moving to the kitchen to pick up a cup of coffee, it is an agent. Note that an agent must be situated in an environment where it can sense and perceive data to achieve its goals.

Building on the concept of being autonomous outlined in [2], an agent is autonomous if it can set its own goals [4]. Rather than adopting goals from other agents, an autonomous agent generates its goals based on its motivations. A motivation is any desire or preference that drives the generation and adoption of goals, influencing the agent’s reasoning or behavior to fulfill those goals. While motivations are intrinsic and governed by internal, inaccessible rules, goals can be derivative, serve as measurable indicators of the agent’s behavior, and directly relate to its motivations. Importantly, an agent is autonomous because its goals are not imposed but emerge in response to its environment.
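The distinction between an agent and an autonomous agent can be sketched in code. This is a loose illustration of the idea, not the formal framework of [4]: the classes, the numeric motivation strengths, the `goal_library` mapping, and the threshold are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """An object that serves goals, which may be set by someone else."""
    goals: list = field(default_factory=list)

    def adopt(self, goal: str) -> None:
        self.goals.append(goal)


@dataclass
class AutonomousAgent(Agent):
    """An agent that generates its own goals from its motivations."""
    # Hypothetical motivation strengths in [0, 1].
    motivations: dict = field(default_factory=dict)

    def generate_goals(self, goal_library: dict, threshold: float = 0.5) -> list:
        """Adopt each goal whose driving motivation is strong enough."""
        for motivation, strength in self.motivations.items():
            goal = goal_library.get(motivation)
            if goal is not None and strength >= threshold and goal not in self.goals:
                self.adopt(goal)
        return self.goals


robot = AutonomousAgent(motivations={"thirst": 0.9, "curiosity": 0.1})
library = {"thirst": "fetch coffee from the kitchen", "curiosity": "explore the hallway"}
print(robot.generate_goals(library))
```

A plain `Agent` only receives goals through `adopt`, whereas the `AutonomousAgent` derives them from its motivations, which mirrors the claim that autonomous goals are generated rather than imposed.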

Motivation

Luck and d’Inverno [4] discussed the relationship between an autonomous agent and its motivation. However, they did not explore the concept of motivation itself in detail. In this section, we examine this concept further and explore the connection between motivation and autonomy. To do so, we refer to [5], published in 1995. This research examines the relationship between autonomy and motivation in cognitive science, using examples from language learning. Here, we reinterpret these concepts using terminology relevant to this post.

In cognitive science, Deci and Ryan [6] classified motivation into two types: intrinsic and extrinsic. Intrinsic motivation refers to engaging in an activity for its own sake, driven by the agent's own purpose rather than by external pressures or rewards. In contrast, extrinsic motivation arises when a task is performed for reasons other than a genuine purpose in the task itself. For example, an agent may undertake a task because of external pressure or obligation rather than its own desire.

An agent's motivation is influenced by the events it perceives from its environment. Informational events (e.g., strategies and opportunities) enhance the agent's self-determination, fostering intrinsic motivation. In contrast, controlling events (e.g., grades and predefined rewards) drive extrinsic motivation. It is important to note that "feedback" can be either informational or controlling, so the term should be used with caution.

An agent's motivation is also shaped by factors such as its abilities, task difficulty, effort, and the uncertainty of the environment. For example, an agent may be motivated to act by the experiences or goals it seeks to pursue or avoid, as well as by the level of effort it is willing to exert in that pursuit. Furthermore, the following factors contribute to an increase in the agent's motivation:

- Levels of autonomy.

- Understanding the metrics of its success.

- Having more effective strategies to overcome its failures.

Levels of Autonomy

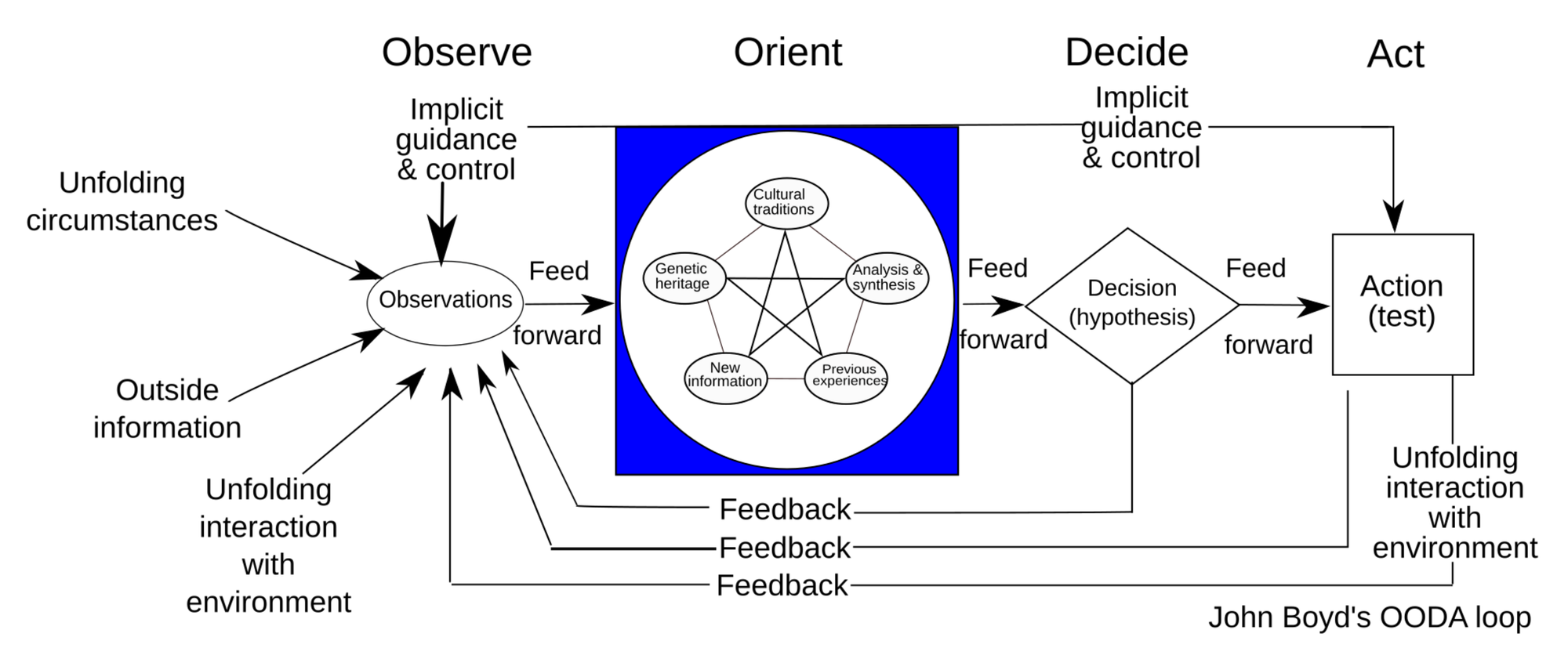

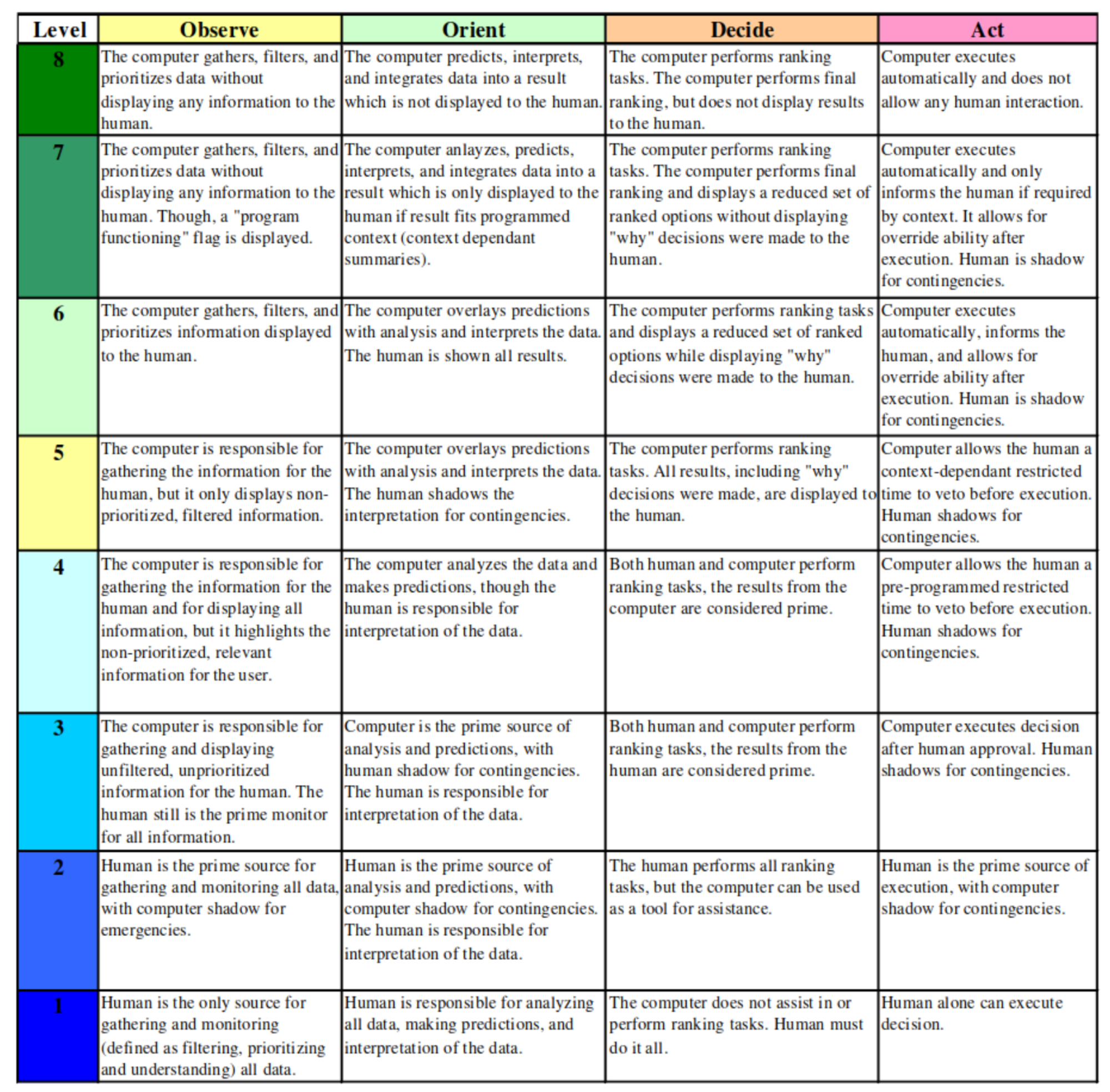

In this section, we explore levels of autonomy. To achieve this, we refer to methods developed by NASA for autonomous flight management systems [7]. This research, published in 2003, defines levels of autonomy by integrating Sheridan's scale of human-machine automation [8] with the Observe-Orient-Decide-Act (OODA) loop [9]. Details are provided below.

Sheridan outlined 10 levels of human-machine automation [8]:

- Level 1: The human must do everything.

- Level 2: The machine offers everything that it knows, and the human must perform an action or a set of actions.

- Level 3: The machine filters the options, and the human must perform an action or a set of actions.

- Level 4: The machine provides only the best option, and the human must perform an action or a set of actions.

- Level 5: The machine performs an action with human approval.

- Level 6: The machine gives the human a restricted time to veto, then performs the action.

- Level 7: The machine performs an action automatically, then necessarily informs the human.

- Level 8: The machine performs an action automatically, then informs the human only when asked.

- Level 9: The machine performs an action automatically, then informs the human if it decides to.

- Level 10: The machine does everything and ignores the human.

Boyd developed the Observe-Orient-Decide-Act (OODA) loop [9] to model how military personnel make decisions autonomously on the battlefield.

By combining the 10 levels of human-machine automation with the OODA loop, NASA developed a matrix to assess the autonomy levels of modules in their flight management system (see Figure 4). The matrix shows that autonomy is not a single number: it is assessed separately along four dimensions, one for each OODA stage. When the matrix is applied to an autonomous agent, the agent is considered fully autonomous if it reaches level 8 in all four stages.
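As a minimal sketch, an agent's autonomy profile can be stored as one level per OODA stage and checked against the "level 8 in all stages" criterion stated above. The names `OODA_STAGES`, `FULL_AUTONOMY_LEVEL`, and `is_fully_autonomous`, as well as the dictionary layout, are assumptions made for this example and are not taken from [7].

```python
OODA_STAGES = ("observe", "orient", "decide", "act")
FULL_AUTONOMY_LEVEL = 8  # the level the text associates with full autonomy


def is_fully_autonomous(levels: dict) -> bool:
    """Return True only if every OODA stage reaches the full-autonomy level."""
    missing = [stage for stage in OODA_STAGES if stage not in levels]
    if missing:
        raise ValueError(f"missing autonomy levels for stages: {missing}")
    return all(levels[stage] >= FULL_AUTONOMY_LEVEL for stage in OODA_STAGES)


# Hypothetical profile: the agent senses and interprets on its own,
# but a human still takes part in deciding and acting.
profile = {"observe": 8, "orient": 8, "decide": 5, "act": 6}
print(is_fully_autonomous(profile))
```

Keeping one level per stage makes it visible where a human is still in the loop, which a single overall autonomy score would hide.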

References

- [1] Turing, A.M., 1936. On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society, s2-42(1), pp. 230-265.

- [2] Maes, P., 1993. Modeling adaptive autonomous agents. Artificial Life, 1(1-2), pp. 135-162.

- [3] Du, H., 2024. Agent and Multi-Agent System. terminology.

- [4] Luck, M. and d'Inverno, M., 1995. A formal framework for agency and autonomy. In ICMAS (Vol. 95, pp. 254-260).

- [5] Dickinson, L., 1995. Autonomy and motivation: a literature review. System, 23(2), pp. 165-174.

- [6] Deci, E.L. and Ryan, R.M., 2013. Intrinsic motivation and self-determination in human behavior. Springer Science & Business Media.

- [7] Proud, R.W., Hart, J.J. and Mrozinski, R.B., 2003. Methods for determining the level of autonomy to design into a human spaceflight vehicle: a function specific approach (No. JSC-CN-8129).

- [8] Sheridan, T.B., 1992. Telerobotics, automation, and human supervisory control. MIT Press.

- [9] Boyd, J.R., 1996. The essence of winning and losing. Unpublished lecture notes, 12(23), pp. 123-125.